Introduction

The purpose of this post is to describe how to configure an iSCSI SAN in VMware Virtual Infrastructure 3.5 using the software iSCSI initiator.

This guide assumes that the network and the storage system have already been configured and that you have received the following information:

ESX hosts

- iLO IP address and credentials

- IP address for ESX host

- IP address for VMotion

- FQDN for the ESX host (it must resolve in DNS)

- Whether Ethernet traffic is VLAN tagged (if so, you need the VLAN ID) or uses access ports only

- Subnet, gateway, DNS servers

Storage (typically set up in a closed network, 192.168.1.x/24)

- IP addresses for the storage targets (typically 2 or 4 targets)

- IP address for the Service Console on ESX

- IP address for VMkernel (iSCSI) on ESX

- Subnet and gateway

- A LUN made available to the ESX host through a storage group

Read VMware's “iSCSI Design Considerations and Deployment Guide” for detailed instructions. Searching for the title will find it.

Also make sure that the ESX host has two separate NICs that can be used for storage. For example, on a blade server, use four NICs for regular Ethernet traffic and the last two, on mezzanine card 2, for storage. The NICs can be of any type and make, because the software iSCSI initiator runs in ESX on top of the NIC.

Instruction steps

0. First, below is a typical storage architecture:

1. In VI client: Make sure the ESX server is licensed for iSCSI and VMotion under Configuration -> Licensed features

2. Under Configuration -> Networking, add a new virtual switch that will be used for storage. Attach the NICs you want to use.
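Step 2 can also be done from the service console. The following is a minimal sketch. It assumes the storage vSwitch is called vSwitch1 and the storage NICs are vmnic2 and vmnic3. These are example names only, so check esxcfg-nics -l for yours. The function name and the DRY_RUN switch are hypothetical conveniences: with DRY_RUN=1, the commands are printed instead of run.

```shell
# Sketch: create the storage vSwitch and link the storage NICs to it.
# Function name and DRY_RUN are hypothetical; with DRY_RUN=1 the
# commands are echoed instead of executed.
add_storage_vswitch() {
    vswitch="$1"; shift
    ${DRY_RUN:+echo} esxcfg-vswitch -a "$vswitch"
    for nic in "$@"; do
        # Link each physical NIC as an uplink of the new vSwitch
        ${DRY_RUN:+echo} esxcfg-vswitch -L "$nic" "$vswitch"
    done
}

# First list the physical NICs to find the ones cabled for storage:
#   esxcfg-nics -l
# Then, for example (example names):
#   add_storage_vswitch vSwitch1 vmnic2 vmnic3
```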

3. Click Properties for the new vSwitch and add a second Service Console (COS2). Give it an IP address and subnet mask on the local storage network. COS2 inherits the default gateway of the first Service Console (a routable gateway IP). This is fine, because that gateway is not used by COS2.
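Step 3 can be sketched from the service console as well. The sketch assumes that the first Service Console is vswif0, so COS2 becomes vswif1. The port group name "Service Console 2" and the addresses are example values. The function name and DRY_RUN switch are hypothetical: with DRY_RUN=1, the commands are only printed.

```shell
# Sketch: add a COS2 port group and Service Console interface on the
# storage vSwitch. vswif1 assumes vswif0 is the first Service Console.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
add_cos2() {
    vswitch="$1" ip="$2" netmask="$3"
    pg="Service Console 2"
    ${DRY_RUN:+echo} esxcfg-vswitch -A "$pg" "$vswitch"
    ${DRY_RUN:+echo} esxcfg-vswif -a vswif1 -p "$pg" -i "$ip" -n "$netmask"
}

# Example (example addresses):
#   add_cos2 vSwitch1 192.168.1.11 255.255.255.0
```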

4. Click Properties for the new vSwitch and add a VMkernel port that will be used for iSCSI traffic. Label it iSCSI and enter its IP address and subnet mask.

After the VMkernel port is created, open its properties and enter the VMkernel default gateway. This gateway IP should be the same as the IP address of COS2, so the VMkernel points its gateway at the local Service Console.

Do not tick the box for VMotion use.
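Step 4 can be sketched from the console using the same approach. The sketch creates the iSCSI port group and the VMkernel interface, then points the VMkernel default gateway at the COS2 address. VMotion is not enabled by these commands. The addresses in the example are example values. The function name and DRY_RUN switch are hypothetical.

```shell
# Sketch: create the iSCSI VMkernel port and set its default gateway
# to the COS2 address, as described in step 4.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
add_iscsi_vmkernel() {
    vswitch="$1" ip="$2" netmask="$3" cos2_ip="$4"
    ${DRY_RUN:+echo} esxcfg-vswitch -A iSCSI "$vswitch"
    ${DRY_RUN:+echo} esxcfg-vmknic -a -i "$ip" -n "$netmask" iSCSI
    # VMkernel default gateway = IP address of COS2
    ${DRY_RUN:+echo} esxcfg-route "$cos2_ip"
}

# Example (example addresses):
#   add_iscsi_vmkernel vSwitch1 192.168.1.12 255.255.255.0 192.168.1.11
```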

5. When done, the network configuration could look like the dump below:

6. Make sure the VMkernel has a default gateway set under “DNS and routing”.
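To check steps 5 and 6 from the console, you can list the networking setup directly instead of using the VI client. This is a minimal sketch. The function name and DRY_RUN switch are hypothetical. Run without arguments, esxcfg-route prints the current VMkernel default gateway.

```shell
# Sketch: print the storage-related network configuration.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
show_storage_network() {
    ${DRY_RUN:+echo} esxcfg-vswitch -l   # vSwitches, port groups, uplinks
    ${DRY_RUN:+echo} esxcfg-vswif -l     # Service Console interfaces (COS, COS2)
    ${DRY_RUN:+echo} esxcfg-vmknic -l    # VMkernel interfaces (iSCSI)
    ${DRY_RUN:+echo} esxcfg-route        # VMkernel default gateway
}
```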

7. Go to Security Profile and enable the software iSCSI client through the firewall:
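The firewall change in step 7 can also be made from the service console. This sketch enables the swISCSIClient firewall service and then queries its state. The function name and DRY_RUN switch are hypothetical.

```shell
# Sketch: open the ESX firewall for the software iSCSI client.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
open_swiscsi_firewall() {
    ${DRY_RUN:+echo} esxcfg-firewall -e swISCSIClient   # enable the service
    ${DRY_RUN:+echo} esxcfg-firewall -q swISCSIClient   # confirm it is enabled
}
```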

8. Go to Configuration -> Storage Adapters, select the software iSCSI vmhba and click “Properties”.

9. Click Configure and then tick the “Enabled” check box and click OK.
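Steps 8 and 9 have a console equivalent. This sketch turns on the software iSCSI initiator and then queries whether it is enabled. The function name and DRY_RUN switch are hypothetical.

```shell
# Sketch: enable the software iSCSI initiator and check its state.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
enable_sw_iscsi() {
    ${DRY_RUN:+echo} esxcfg-swiscsi -e   # enable software iSCSI
    ${DRY_RUN:+echo} esxcfg-swiscsi -q   # query: is it enabled?
}
```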

10. On the Properties page for the software iSCSI adapter, choose the Dynamic Discovery tab and enter the IP addresses of the storage targets (static targets are not supported for software initiators).
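Under some assumptions, the dynamic discovery addresses from step 10 can be added from the console. The sketch assumes that the software iSCSI adapter is named vmhba32. Check the actual name under Storage Adapters. The target IPs in the example are the ones that appear in the netstat output later in this post. The function name and DRY_RUN switch are hypothetical.

```shell
# Sketch: add SendTargets (dynamic discovery) addresses to the
# software iSCSI adapter. vmhba32 is an assumed adapter name.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
add_send_targets() {
    hba="$1"; shift
    for target in "$@"; do
        ${DRY_RUN:+echo} vmkiscsi-tool -D -a "$target" "$hba"
    done
}

# Example:
#   add_send_targets vmhba32 192.168.1.1 192.168.1.2 192.168.1.3 192.168.1.4
```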

11. Now, from the Storage Adapters page, rescan the HBAs and verify that you see 2 or 4 storage targets.
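From the console, the rescan in step 11 can be sketched as follows. After the rescan, the LUNs and their paths are listed, so you can compare the result with the path count discussed further down. The adapter name vmhba32 is again an assumption. The function name and DRY_RUN switch are hypothetical.

```shell
# Sketch: rescan the software iSCSI adapter and list LUNs and paths.
# With DRY_RUN=1 (hypothetical switch) the commands are only echoed.
rescan_iscsi() {
    hba="$1"
    ${DRY_RUN:+echo} esxcfg-rescan "$hba"   # rescan for new targets/LUNs
    ${DRY_RUN:+echo} esxcfg-mpath -l        # list LUNs and their paths
}

# Example (assumed adapter name):
#   rescan_iscsi vmhba32
```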

12. From Configuration -> Storage, add the new LUN or LUNs.

13. When you have added a LUN, right-click it and choose Properties.

For an MSA2012i with two Storage Processors (SPs), each with two ports, there will be 4 targets. (Update: in 3.5 U3 I have seen the same setup with only two visible targets, but live SP failover still works fine.) There will be 2 paths. On Fibre Channel HBAs there are typically 4, because each HBA is represented with two paths. With the software initiator, there is one logical initiator and two physical NICs teamed in the vSwitch. The initiator has two paths to two targets on the same SP.

14. Tricks:

- Make sure that all targets can be pinged from COS2. SSH to the ESX host, then from the console SSH to COS2. From there you can ping the targets.

- If it is an HP c3000/c7000 blade enclosure, make sure that connections between the two switches used for storage are allowed (this is done by the network department).

- Jumbo frames: If you enable them, remember to change the setting on all relevant parts: storage, network and ESX (on the vSwitch and port groups). Not every physical NIC supports jumbo frames. On the BL460c G1, the built-in NICs support them, but the HP NC326m, for example, does not. To enable jumbo frames from the console, run the following two commands:

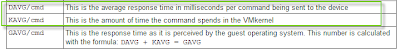

vSwitch command: esxcfg-vswitch -m 9000 'vSwitchX'

VMkernel command: esxcfg-vmknic -a -i 'ip-address vmkernel' -n 'netmask vmkernel' -m 9000 'portgroupname'

- Check outgoing ESX traffic: From the console, you can check whether the ESX host sends any traffic to the targets while you rescan for new HBAs and VMFS volumes. Run the following command while the rescan is in progress:

- netstat -an | grep 3260

Example:

[root@vmtris001 root]# netstat -an | grep 3260

tcp 0 1 192.168.1.12:33787 192.168.1.2:3260 SYN_SENT

tcp 0 0 192.168.1.12:33782 192.168.1.4:3260 TIME_WAIT

tcp 0 1 192.168.1.12:33788 192.168.1.3:3260 SYN_SENT

tcp 0 0 192.168.1.12:33779 192.168.1.1:3260 TIME_WAIT
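A small filter can make the netstat check easier to read. This is a minimal sketch. It assumes the standard Linux netstat -an column layout shown in the example, where the foreign address is column 5 and the state is column 6. The function names are hypothetical. A connection stuck in SYN_SENT means the target has not answered the TCP handshake. In the example output, that applies to 192.168.1.2 and 192.168.1.3.

```shell
# Sketch: summarise iSCSI (TCP port 3260) connections from netstat -an.
# Prints "<target-ip> <state>" for each connection to port 3260.
iscsi_conn_states() {
    awk '$5 ~ /:3260$/ { split($5, a, ":"); print a[1], $6 }'
}

# Targets that never answered the TCP handshake (stuck in SYN_SENT).
unanswered_targets() {
    iscsi_conn_states | awk '$2 == "SYN_SENT" { print $1 }' | sort -u
}

# Usage on the service console, during a rescan:
#   netstat -an | iscsi_conn_states
#   netstat -an | unanswered_targets
# vmkping sends the ping from the VMkernel interface instead of the
# Service Console, for example:
#   vmkping 192.168.1.2
```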