At my current client we run multiple Azure Firewalls with forced tunneling to on-prem. By default this requires two public IP addresses: one for the data plane (customer traffic) and one for service management traffic (used exclusively by the Azure platform).

Since all traffic is routed to on-prem, there is no internet ingress or egress on these firewalls, so the security team asked us to remove the public IP for the data plane, leaving just one public IP per firewall.

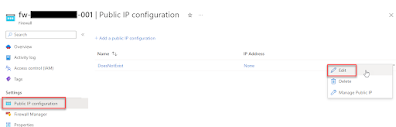

When deploying from the portal or via PowerShell, this cannot be configured during deployment, but it can be done post deployment: go to the Azure Firewall -> Public IP configuration -> click the three dots to the right of the public IP -> choose Edit -> and then choose None so that no public IP is associated. See the screenshot for an example.

Note that you cannot delete the public IP directly, and the Manage Public IP option cannot be used to disassociate it either (which is perhaps a bit confusing).

Deploy with ARM template

If deploying the firewall using an ARM template, it is possible to configure just one public IP at the time of deployment.

I've put an example template on GitHub that can be used for reference:

fw-no-public-ip.parameters.json

The following resources should be deployed in advance:

- A resource group

- A VNet

- 2 x subnets (AzureFirewallSubnet and AzureFirewallManagementSubnet, both should be /26 in size)

- A firewall policy

References to the subnets and the policy should be updated in the parameters file before deploying the template.
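To show what "just one public IP" looks like in the template itself, here is a minimal sketch of the firewall resource. It is not a copy of the template on GitHub: the parameter names (`firewallName`, `firewallPolicyId`, `firewallSubnetId`, `managementSubnetId`, `managementPublicIpId`), the API version, the SKU tier and the management configuration name are all assumptions for illustration. The key point is that the entry in `ipConfigurations` references only the subnet and has no `publicIPAddress`, while `managementIpConfiguration` keeps its public IP.

```json
{
  "type": "Microsoft.Network/azureFirewalls",
  "apiVersion": "2023-09-01",
  "name": "[parameters('firewallName')]",
  "location": "[resourceGroup().location]",
  "comments": "Illustrative sketch only. Parameter names, apiVersion and SKU tier are assumptions, not taken from the referenced template.",
  "properties": {
    "sku": {
      "name": "AZFW_VNet",
      "tier": "Standard"
    },
    "firewallPolicy": {
      "id": "[parameters('firewallPolicyId')]"
    },
    "ipConfigurations": [
      {
        "name": "DoesNotExist",
        "properties": {
          "subnet": {
            "id": "[parameters('firewallSubnetId')]"
          }
        }
      }
    ],
    "managementIpConfiguration": {
      "name": "managementIpConfig",
      "properties": {
        "subnet": {
          "id": "[parameters('managementSubnetId')]"
        },
        "publicIPAddress": {
          "id": "[parameters('managementPublicIpId')]"
        }
      }
    }
  }
}
```

In this sketch the management public IP is passed in as a resource ID, so it would need to exist already or be created elsewhere in the same template. The name of the data plane IP configuration is arbitrary.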

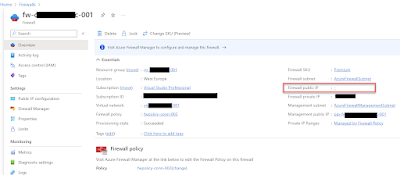

In the second screenshot below you can see the result post deployment: the firewall has no data plane public IP, but it does have one for management traffic. In the first screenshot below you can see that there is an entry called "DoesNotExist" (just an example name), but it is not associated with a public IP.